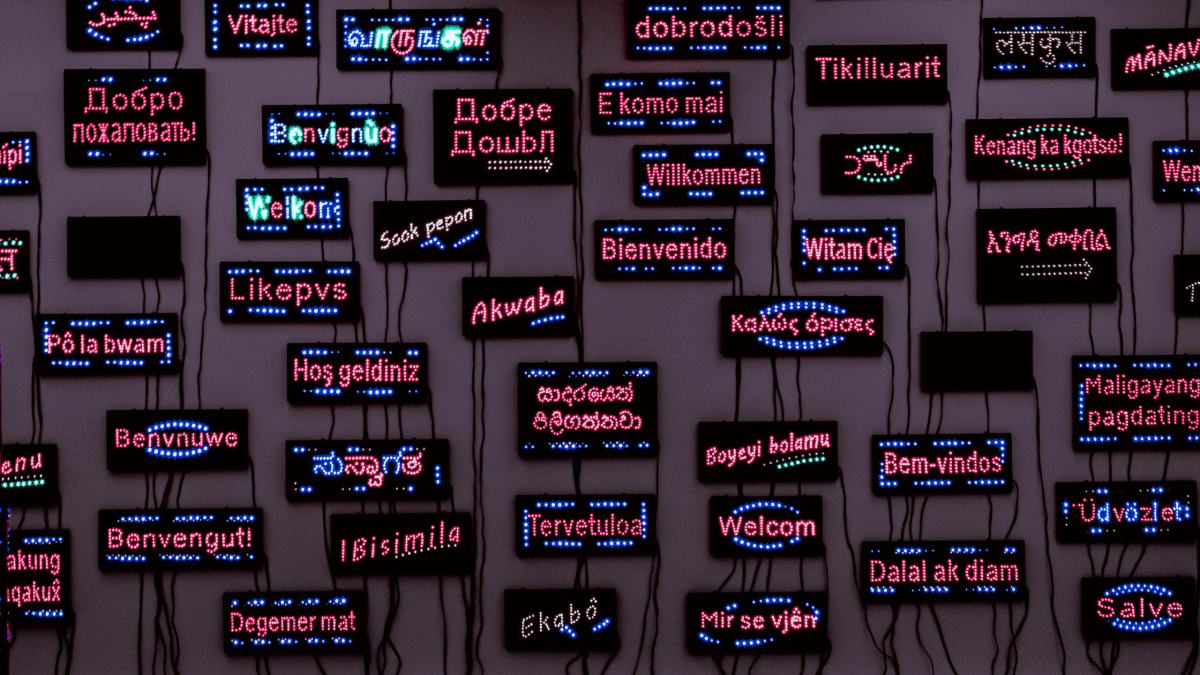

If you have been on social media lately consuming content originally produced in languages other than your own, you’re familiar with the push for dubbed audio produced using artificial intelligence (AI). This is not a minor shift.

Meta and YouTube are doubling down on AI translation features that aim to increase reach and attract a broader audience.

And while their goals are similar, their design choices in implementing this technology produce very different outcomes.

Meta’s approach builds on multimodal translation research and platform integration. Its published work on SeamlessM4T describes a multilingual, multimodal translation system that handles speech and text together. Meta’s product teams are rolling out AI translations for Reels and other short‑video formats. The emphasis is rapid cross‑language discovery: translate the clip, preserve rhythm or lip movement where possible, and let that content travel across global feeds. The result is seamless, with the same voices from the original video speaking a different language fluently.

YouTube’s path is shaped by its main role as a long‑form video publisher that has long offered auto‑captions and automatic caption translation. More recently it has also been expanding auto‑dubbing and AI‑voice features, which replace the original audio with translated voices. The goal is to convert an existing video into another language quickly, so it can appear native to the language selected by the viewer. The result is extremely robotic: the cadence and inflections of the original audio are completely gone, and all voices sound the same.

The benefits and downsides of AI translations

In The Morning Show, Alex Levy, played by Jennifer Aniston, introduces a new technology to dub presenters automatically during the Olympics in Paris 2024.

The result is impressive, and while completely fictional, it is not too far from what could become reality very soon.

If you distribute content internationally, automatic translation dramatically lowers the cost and time to localize content in multiple languages.

This can expand reach and reduce entry barriers for small creators, or help publishers test new markets without upfront dubbing budgets.

For platforms and publishers the desired upsides are more watch time across regions, more content repurposed, and potentially higher ad inventory.

Meta’s multilingual model unlocks faster cross‑language reuse, and YouTube’s auto‑dubbing feature lets creators reach new language markets.

But automated results can be generic. Machine translations still struggle with nuance, humour, cultural references and domain‑specific language.

The result is translations that are technically correct but tone‑deaf, or translations that introduce errors that change meaning.

In short‑form social clips, that might be tolerable.

But in long‑form content, particularly journalism, documentary or public service content, these mistakes can backfire easily.

AI‑generated dubbing also erases the human voice. When platforms replace a creator’s inflection and timing with a synthetic voice, the subtle emotional cues that make storytelling credible can vanish.

Automatic translation can also mislead audiences. When AI translates or dubs a speech or clip incorrectly, it can alter meaning or context, introduce false claims or distort the message, creating fertile ground for misinformation.

Algorithmic isolation

Algorithms don’t just curate content; they confine it. The more we engage with one type of content, the smaller our window to the world becomes.

Automatic translation funnels content into monolithic translated versions rather than enabling variety and a true cultural exchange in multiple languages.

Some viewers might prefer experiencing content in its original language to preserve nuance, or might just want to learn that language themselves, instead of getting instant translation.

In 2024, Duolingo reported that millions of learners worldwide are studying dozens of languages, showing strong appetite to engage with content beyond a mother tongue.

Content in other languages is a form of cultural engagement, not a barrier.

Artists like Bad Bunny have huge global audiences beyond native Spanish speakers, while Rosalía produced Lux, a praised album in which she sings in 13 different languages. It seems like many people are happy to consume culture in a language other than their own.

Ultimately, when every feed feels tailor‑made to your first language and every video looks inauthentic, it becomes harder to tell what’s real.

The rise of AI slop weakens what feels real

AI‑generated “slop” is low-quality content, often generic or inaccurate, that is created using artificial intelligence tools like OpenAI’s new app, Sora 2.

Endless auto‑produced videos, songs, and articles now circulate online, optimised for clicks rather than meaning or intention.

Some mimic human creativity; others mimic human emotion.

The volume alone is enough to drown out real voices.

The problem isn’t only that AI is replacing creators; it is also replacing trust.

When anyone can fabricate a news clip or a celebrity quote that feels real, even make them speak your language with the click of a button, the very foundation of shared understanding weakens.

The cultural clash

As translated audio and video become easier to generate, provenance becomes weaker. AI can fabricate speeches, stitch translated audio into a separate video clip, or create lip‑sync illusions.

In an age of algorithmic isolation, where audiences rely on narrow feeds, synthetic translations become tools for manipulation.

Forced localization may also backfire culturally. Platforms might push translations into languages the audience doesn’t want.

In conclusion, platforms pushing AI translations must always:

– Let users opt out of forced translation. If some audiences want the original language, let them switch back to the original audio, subtitles, or both.

– Provide dual audio/subtitle tracks with the synthetic translation and the original language, so viewers can choose how to engage with the content.

– Use automatic translation as a draft and run regional dialect checks or a native speaker review, especially for high‑stakes content.

– Treat translations as cultural interpretations, not just literal conversions, adapting idioms, slang and culture.

Content producers and distributors must recognize that multilingual audiences can be a design factor, and that content in a foreign language can be part of a value proposition, not an obstacle.

Removing language barriers is a legitimate social good, but the mechanics of platform systems and the incentives that run them carry serious risks.

AI translations erode craft and provenance when unchecked.

The future is not automated reach or human craft alone. It is a combination of both, while protecting accuracy, tone and trust.
